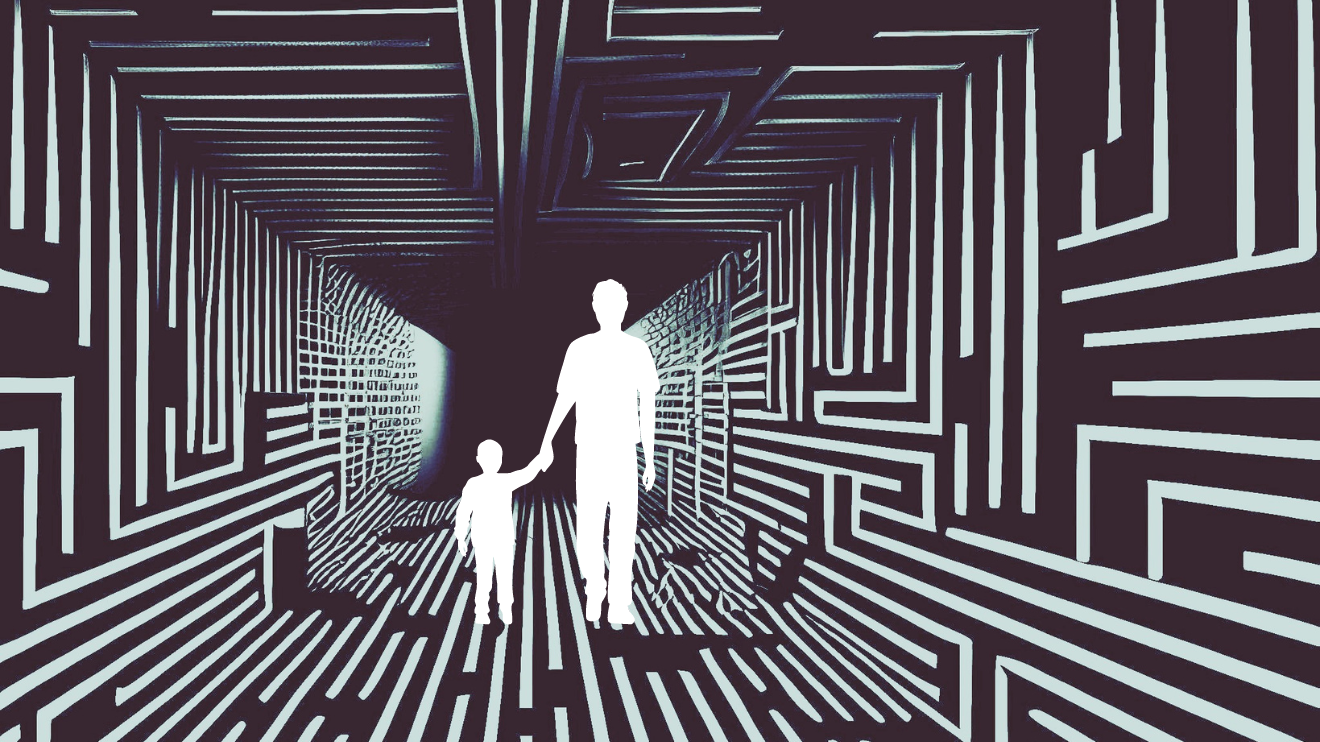

Why you (must not) make predictions about the world

Prepare, don't predict.

Feeling blah and craving an adventure? Here's an idea: Go ask Google how artificial intelligence will change the world. Then brace yourself for the roller-coaster.

Says Forbes: Advances in AI are enabling researchers to better understand everything from the cosmos to the human body.

Time magazine declares: We are facing a step change in what's possible for individual people to do, and at a previously unthinkable pace.

Lest you get too carried away with these romantic fantasies, here's a reminder from Goldman Sachs: AI will eliminate the equivalent of 300 million full-time jobs.

We are drowning in predictions about how AI will upend everything we know and hold true. I wasn't around during the industrial revolution, but I can imagine it was a time of similar forecasting fervour, albeit without the magnifying influence of the internet.

If you, like me, thought 'large language model' = bigger font size when you first heard the term, you probably find this deluge of predictions discombobulating. Throw predictions about climate catastrophes, deepening polarisation, and global-scale conflict into the mix, and you'd be forgiven for feeling batshit bonkers. I've often tried sitting at my desk and asking myself, "What is the world coming to?" Each time, I couldn't resist getting up within two minutes and eating a banana to preempt an anxiety attack.

As my therapist never fails to remind me, every feeling is an invitation to dig deeper. What is this particular moment in history, and our reaction to it, trying to teach us? Why do we make predictions, even though we mostly suck at making them? And how do we soothe ourselves in the aftermath without resorting to sugar highs?