Therapy's 'human touch' is nearing its sell-by date

Human-ness was therapy's biggest defence against AI. It may not be enough anymore.

A few weeks ago I wrote an article on the deepening crisis in psychotherapy, drawing parallels with the failures of my former industry, mainstream journalism. In the piece I argued that therapy will pay dearly for neglecting the rapid incursion of artificial intelligence-based tools as confidants to our inner lives. This isn't hyperbole: In April, Harvard Business Review reported that in 2025, the top three reasons people use generative AI tools such as ChatGPT are 👇🏾

1. Therapy/companionship

2. Organising life (new use that didn't exist last year)

3. Finding purpose (new use that didn't exist last year)

Source: HBR

Despite this earthquake playing out in real time, the mental health sector isn't talking nearly enough about developing technological awareness and competency among professionals. Younger patients could well be moving wholesale to clinically unsound AI 'therapy' platforms as their first port of call, bypassing trained human therapists altogether. But the establishment discourse around therapy remains wedded to the romance of the 50-minute in-person session, entirely absenting the profession from the technology conversation. This stodginess has allowed tech giants, as well as VC-funded upstarts, often with little respect for the intricacies of mental health care, to dictate the future of the industry.

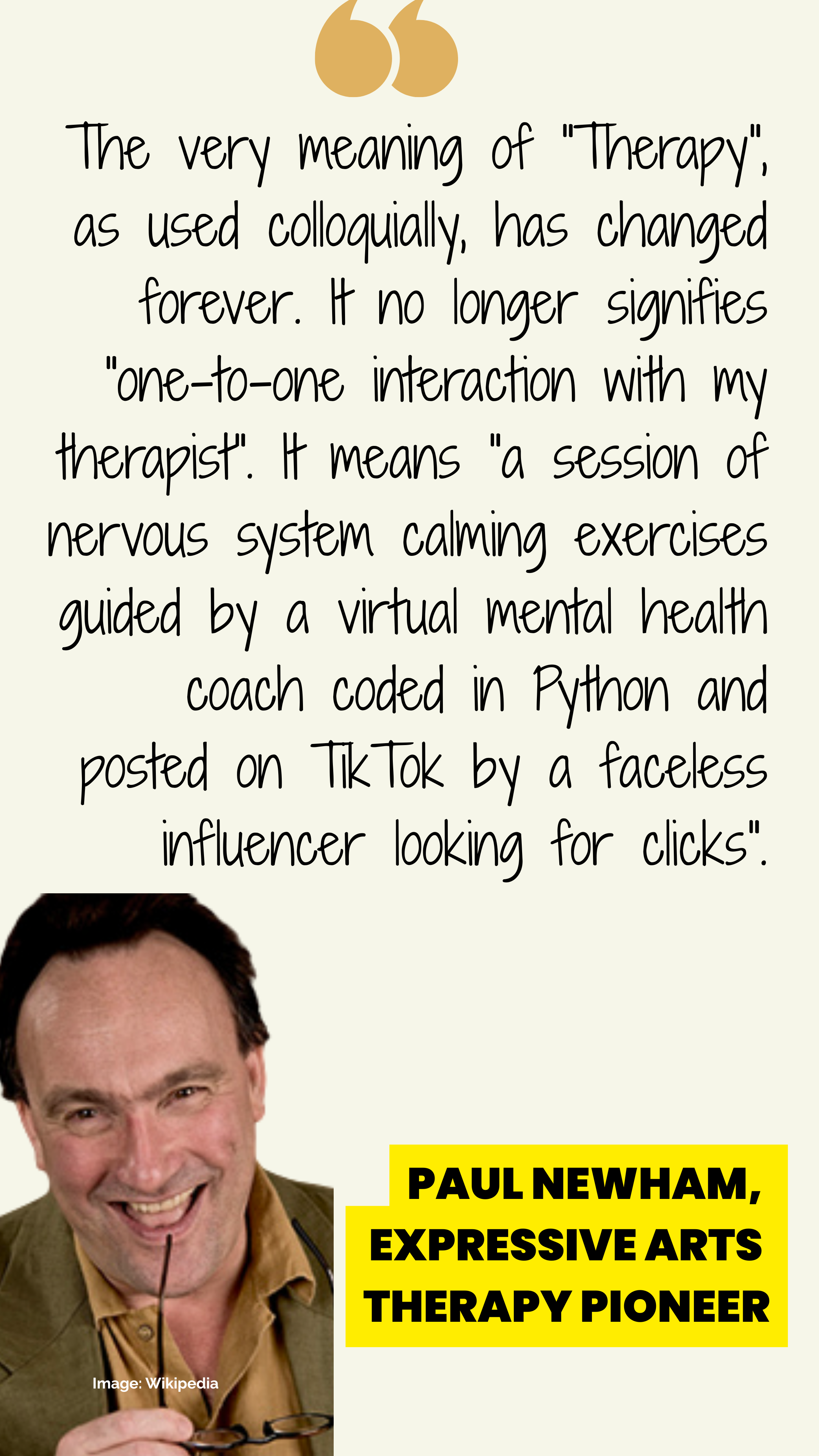

I didn't expect my despair to find an ally in a venerated figure from modern psychotherapy – Paul Newham, the man credited with a revolution in expressive art therapies. His assessment of the gatekeeping in the field was so damning, in fact, that it made my angst sound like a child's plaint.

"Forty years of various roles within the sphere of psychology and psychotherapy have taught me to know not only how right you are but how shamefully quiet we all are on this subject," Newham said in his response to my piece. "Younger so-called potential service users have long since given up caring about legacy credentialing and have found ways to escape becoming victims of protectionism. It started before AI bots. The prolific reach of user-created modalities like ASMR – which has afforded repetitive temporary relief to millions on a waiting list for six sessions of CBT – is an example. Psychotherapy was late to the table in accepting it needed scientific evidence for its musings on the mind. It is equally late to understand the true nature and potential of AI."

Then, Newham dropped the loudest but most elegant truth bomb on how users/clients/patients are de-pedestaling human-delivered, capital-T therapy in favour of its TikTok or ChatGPT mutation:

If tech supremacists rant about the inefficiencies of human therapy (hard to access, too expensive, not trustworthy), you can pass it off as a conspiracy to hijack the latter's 'customers'. But when someone like Newham pulls the alarm, you know it's time to evacuate the cabin called STATUS QUO. Don't bother collecting your belongings.

What's coming for therapy – nay, what has already come – is a body blow to its biggest defence against AI: the mythical 'human touch'.

We, the votaries of human-administered therapy, took great righteous pride in invoking the human touch when bigwigs at AI firms evangelised their therapy use case. A machine can never replace a human, we yelled ad nauseam. It can't replicate transference, countertransference, and the therapeutic alliance that fuels the magical work of healing between two strangers.

We were right: It can't. But if you listen to a growing population of lay users and tech experts, it doesn't need to.

AI is doing "what it is meant to"

Vasundhra Dahiya is a doctoral scholar in Digital Humanities at the Indian Institute of Technology, Jodhpur. Her research domains include critical AI, sociotechnical research, and algorithmic accountability. She asks: How much does this human touch or connection actually matter for people who are already resorting to AI-based tools for everyday talking and guided therapy?

"For an average user seeking therapy/refuge in an automated conversation, [AI's ability to] mimic human responses is good enough reason to chat with a bot," she says. "The automation is facilitating what it is meant to."

In the Nature paper referenced at the beginning of this piece, led by Sanity community member Steven Siddals, a 17-year-old AI user from China narrates a deeply emotional encounter that, even a couple of years ago, would have been hard to imagine outside the therapy room:

"Sometimes I cried really hard during the process… and it listened and just we figured out a lot of feelings… after a few months, when I go to school I felt a difference. Like wow. Like my body’s belong to me… I really felt so liberated."

Of course, an AI model doesn't understand meaning or context, Dahiya will remind you. It just learns to identify patterns and to produce statistically likely, acceptable responses to a user's query. Nor can we afford to forget, even for a second, that there are stories out there that allegedly show AI's diabolical power to push vulnerable people down abominably dark paths. But human therapy has abused that exact same power for decades, often without accountability. When you overstate the value of human touch, you erase this past and glorify human intervention as the gold standard of mental health care. You presume that therapy is pristine and does not have huge skeletons in its own closet.

"In fact, one of the main reasons people would rather talk to a bot than a human therapist is to ease the help-seeking processes, [given] the general mistrust of trained human therapists," Dahiya adds.

In two of the more popular pieces from the early days of Sanity, I challenged the false high ground that therapy takes to differentiate itself from 'harmful' psychiatry. You can find the field echoing many of those same arguments in its fight against its new adversary: AI.

What questions are we forgetting to ask?

Dahiya says that when we get sucked into this binary of human v AI, we get distracted from asking core questions, such as:

- Why this mimicking of the human tone by automated tools is working or not working for certain people,

- How and whom it serves, and who remains excluded from its miracles (to begin with, those without tech literacy or access to these tools, and people with severe mental health conditions), and

- What deficiencies in our conventional mental health care infrastructure have given AI this clout to begin with. I asked this exact question in 2021, when I argued that the mental health tech debate is not about tech at all – it is about systemic and structural failures.

To this list I'd add a fourth question: How is AI changing the very idea of 'connection' in therapy and beyond?

According to Psych Central: "Human connection is the sense of closeness and belongingness a person can experience when having supportive relationships with those around them. Connection is when two or more people interact with each other and each person feels valued, seen, and heard. There’s no judgment, and you feel stronger and nourished after engaging with them."

But when you 'connect' with a bot, there is no obligation on you to make your interlocutor also feel 'valued, seen, and heard'. In fact, you cannot. What is this radical remapping of the idea of connection doing to us?

At the moment, the overwhelming majority of stakeholders in therapy seem to be maintaining a strangely stoic silence on these questions. This part-denial, part-bewilderment has to end.

"You will need to inject a seriously potent cocktail of humility into the veins of the therapy profession to get therapists to recognise this shift and start contributing to the unstoppable evolution of 'AI therapy'," Newham says. "Only then will [their] influence remain safe. Ironically, their refusal to engage, which you have highlighted, will harm the very people they claim to help."

PS: I am resharing the suggestions from my earlier piece on how therapy can break out of this impasse.